This article is about the “Digit Recognizer” challenge on Kaggle. You are provided with two data sets. One for training: consisting of 42’000 labeled pixel vectors and one for the final benchmark: consisting of 28’000 vectors while labels are not known. The vectors are of length 784 (28×28 matrix) with values from 0 to 255 (to be interpreted as gray values) and are supposed to be classified as to what number (from 0 to 9) it represents. The classification is realized using SVMs which I implement with kernlab package in R.

This article is about the “Digit Recognizer” challenge on Kaggle. You are provided with two data sets. One for training: consisting of 42’000 labeled pixel vectors and one for the final benchmark: consisting of 28’000 vectors while labels are not known. The vectors are of length 784 (28×28 matrix) with values from 0 to 255 (to be interpreted as gray values) and are supposed to be classified as to what number (from 0 to 9) it represents. The classification is realized using SVMs which I implement with kernlab package in R.

Three representation of the data set

The pre- and post-processing in all cases consists of removing the unused (white – pixel value = 0) frame (rows and columns) of every matrix and finally to scale the computed feature vectors (feature-wise) to mean 0 and standard deviation 1. I gave three representations a try:

- [m] Eight measurements are extracted: (ratio width/height, mean pixel value, vertical symmetry, horizontal symmetry, relative pixel weight for all four quarters).

- [a] Resizing to 10×10 and then just iterate through pixels by row to get a vector of length 100.

- [n] Dichromatize matrix. Now every black pixel can have 2^8 different neighbourhood value combinations. Each is represented in a vector of length 256 which keeps the frequency of the occurences.

To my surprise those three representations performed reverse to what I expected. Best performance showed [a], then [m] and way off was [n]. In hindsight (after “snooping” the data) it makes some sense. I expected more from [n] because I thought this representation might keep track well of details like curvature, straight lines and edges. I still think this approach is promising but probably the neighbourhood has to be increased to distance 2 and post-processed appropriately.

About the classifiers

First of all, as you know, an SVM can only classify into two categories. The classical approach for differentiation of N (10 for this application) categories is to train Nx(N-1)/2 classifiers. One classifier per unordered pair of different categories (one SVM for “0″ vs. “1″ and for “0″ vs. “2″ etc.). Later the input is then fed to all Nx(N-1)/2 classifiers and the category which got chosen most often is then considered to be the correct one. In case several categories share the maximum score one of it is chosen at random.

As for the kernels I sticked to the standards – Gaussian kernel and polynomial kernel (also linear kernel – polynomial kernal of degree = 1). The first grid search I performed for (Gaussian kernel) C x σ in {1,10,100,1000,10’000} x {1,0.1,0.01,0.001,0.0001} and for (polynomial kernel) C x deg in {1,10,100,1000,10’000} x {1,2,3,4,5}. All results are available as CSVs on GitHub.

The grid search was performed for each parameter combination on 10 randomly sampled subsets of size 1000 using 5-fold cross validation on each and then taking the average. By doing so I tried to speed up the processing while keeping high accuracy. In hindsight I should have especially reduced the subsamples from 10 to 2 or 1, given how fast it became clear which kernel / feature combinations would do a reasonable job. And those doing a bad job took way way way longer to finish. Also the number of support vectors exploded as you would expect because the algorithm took a do-or-die attitude to fit the data.

> cor.test(result.rbfdot.a[,"t.elapsed"],result.rbfdot.a[,"cross"])

Pearsons product-moment correlation

data: result.rbfdot.a[, "t.elapsed"] and result.rbfdot.a[, "cross"]

t = 43.2512, df = 1123, p-value < 2.2e-16

alternative hypothesis: true correlation is not equal to 0

95 percent confidence interval:

0.7675038 0.8114469

sample estimates:

cor

0.7904905

> cor.test(result.rbfdot.a[,"t.elapsed"],result.rbfdot.a[,"SVs"])

Pearsons product-moment correlation

data: result.rbfdot.a[, "t.elapsed"] and result.rbfdot.a[, "SVs"]

t = 146.2372, df = 1123, p-value < 2.2e-16

alternative hypothesis: true correlation is not equal to 0

95 percent confidence interval:

0.9716423 0.9774934

sample estimates:

cor

0.9747345

Especially calculations of linear kernel (polynomial of degree 1) for data representation of type [m] took forever. Which makes sense because this representation boils down to a vector of length eight which is quite dense and hence linear separability is not to be expected and the surface starts occilating to reach an optimum. But also for higher degrees polynomial kernels for this representation didn’t perform very impressing in comparison, so I just skipped it. Similar story for data representation [n] which took long to compute, while the performances where partially quite okay but still unimpressing in comparison to polynomial for [a] and Gaussian for [a] and [m] – hence I skipped the computation of [n] alltogether after few classifiers had been trained.

The final round: comparing rbfdot for [a] and [m] with polydot for [a]

The following table/matrix showes us the number of classifiers for a given data set / kernel combination which yielded a cross validation error lower than 1% and took on average less than 5 seconds to compute:

# performance.overview() is located on GitHub in scripts/helpers.r

> performance.overview(list(

"polydot [a]" = result.polydot.a,

"rbfdot [a]" = result.rbfdot.a,

"rbfdot [m]" = result.rbfdot.m),

v="cross", th=0.01, v2="t.elapsed", th2=5)

0:1 0:2 1:2 0:3 1:3 2:3 0:4 1:4 2:4 3:4 0:5 1:5 2:5

polydot [a] 25 21 7 25 15 13 25 19 20 25 16 15 20

rbfdot [a] 15 14 5 15 8 5 15 11 13 15 9 8 6

rbfdot [m] 7 0 0 0 0 0 0 0 0 0 0 0 0

3:5 4:5 0:6 1:6 2:6 3:6 4:6 5:6 0:7 1:7 2:7 3:7 4:7

polydot [a] 0 23 20 23 20 25 20 10 25 15 15 20 20

rbfdot [a] 0 15 12 14 9 15 14 5 15 11 11 9 7

rbfdot [m] 0 0 0 8 0 0 0 0 0 0 0 0 0

5:7 6:7 0:8 1:8 2:8 3:8 4:8 5:8 6:8 7:8 0:9 1:9 2:9

polydot [a] 25 25 15 1 3 0 15 0 14 10 25 15 17

rbfdot [a] 15 15 13 4 5 0 9 2 13 13 15 12 14

rbfdot [m] 0 25 0 0 0 0 0 0 0 0 0 7 0

3:9 4:9 5:9 6:9 7:9 8:9

polydot [a] 8 0 13 25 0 1

rbfdot [a] 4 0 10 15 0 5

rbfdot [m] 0 0 0 25 0 0

Not just shows polydot for [a] on a whole a more promising performance. It almost seems as if the performance of rbfdot is basically a subset. In only one case rbfdot shows for [a] and categories “5″ vs. “8″ a classifier doing a better job than those found for polydot and [a] in the same case. Hence my decision to stick with polydot and data set representation of type [a] in the end.

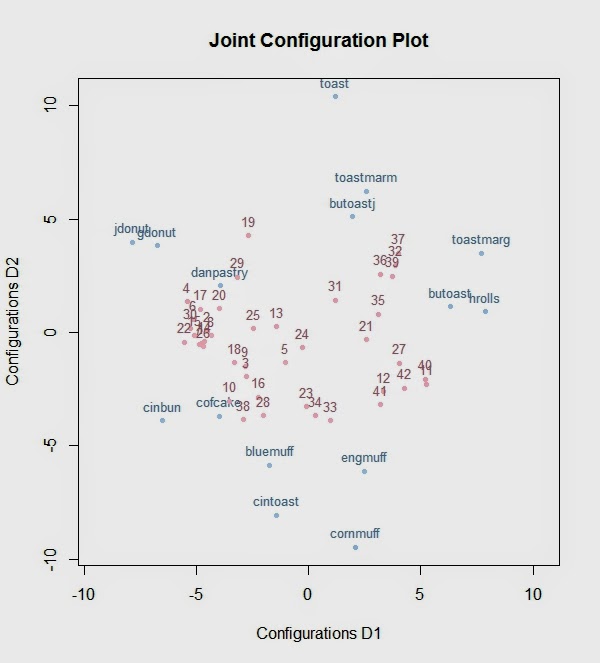

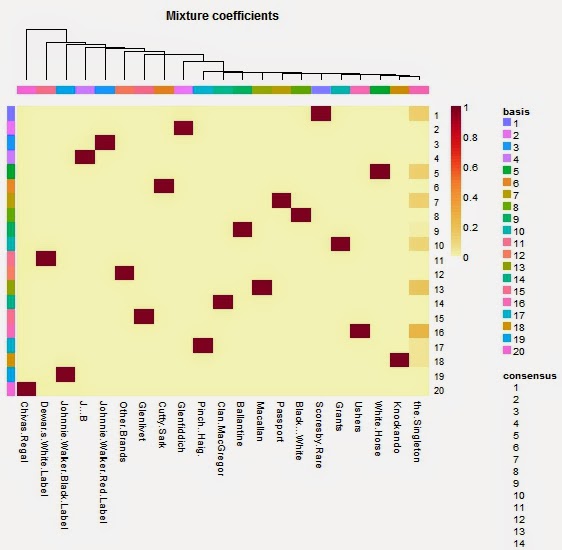

The grids searched and found

What I am inclined to learn from those heatmaps is that on one hand optimal parameter combinations are not entirely randomly scattered all over the place but on the other hand it seems that if best performance is seeked, then one has to investigate optimal parameters individually. In this case I took the best performing classifier configurations (C and degree) with a low empirical computation time and refined C further.

And at the end of the day …

Now with the second grid search result for data set type “a” and polynomial kernel I gave it a try on the test data set and got a score of 98% plus change which landed me rank 72 of 410. The air is getting quite thin above 98% and I would have to resort to more refined data representations to improve the result. But for now I am happy with the outcome. But I guess I will sooner or later give it a second try with fresh ideas.

Expectable out of sample accuracy

I was wondering what accuracy I could expect (y-axis of the chart) from a set of classifiers (10×9/2 as ever) if I assume a homogenous accuracy (x-axis of the chart) for a classifier which is in charge of an input and a random accuracy for the others. I took several runs varying the outcome probability for the “not-in-charge-classifiers” using a normal distribution with mean 0.5 truncated to the interval 0.1 to 0.9 with different deviations. For symmetric reasons I assume that input is always of class “0″. Then f.x. all classifiers dealing with “0″ (“0″ vs. “1″, “0″ vs. “2″ etc.) will have an accuracy of say 94% and the other classifiers (“5″ vs. “7″, “2″ vs. “3″ etc.) will have an outcome probabilty between 10% and 90% with a mean of 50%. The results are surprisingly stable and indicate that for an expected accuracy of 95% an “in-charge-classifier”-accuracy of 94% is sufficient. Given that the average cross validation error of my final classifier set is more than 99% I could get in theory close to 100% for the overall accuracy. But the classifier configurations are – cross validation back and forth – still specialized to the training set and hence a lower true out of sample accuracy has to be expected. Also the assumption that the probabilities of correctness for the classifiers is not independent – simply because a “3″ is more similar to an “8″ than a “1″.

# simulate.accuracy.of.classifier.set() is located on GitHub

# in scripts/viz-helpers.r

result.sd015 <- simulate.accuracy.of.classifier.set(10000,0.15,6)

result.sd01 <- simulate.accuracy.of.classifier.set(10000,0.1,6)

result.sd005 <- simulate.accuracy.of.classifier.set(10000,0.05,6)

result.sd001 <- simulate.accuracy.of.classifier.set(10000,0.01,6)

plot(result.sd015, xlab="avg expected accuracy of classifier if input belongs to one of its classes",

ylab="overall expected accuracy", pch=16,cex=.1, ylim=c(0,1))

abline(v=.94,col=rgb(0,0,0,alpha=.3))

abline(h=.95,col=rgb(0,0,0,alpha=.3))

points(result.sd01, pch=16, cex=.1, col="green")

points(result.sd005, pch=16, cex=.1, col="blue")

points(result.sd001, pch=16, cex=.1, col="orange")

for(i in 1:3) {

result.sd015.const <- simulate.accuracy.of.classifier.set(10000,0.15,6,TRUE)

points(result.sd015.const, pch=16, cex=.1, col="red")

}

Code and data

All scripts and the grid search results are available on GitHub. Sorry about incomplete annotation of the code. If something is unclear or you think you might have found a bug, then you are welcome to contact me. Questions, corrections and suggestions in general are very welcome.

The post “Digit Recognizer” Challenge on Kaggle using SVM Classification appeared first on joy of data.

R-bloggers.com offers daily e-mail updates about R news and tutorials on topics such as: visualization (ggplot2, Boxplots, maps, animation), programming (RStudio, Sweave, LaTeX, SQL, Eclipse, git, hadoop, Web Scraping) statistics (regression, PCA, time series, trading) and more...