In this tutorial you will learn:

- what a heatmap is

- how to create a clean, highly customizable heatmap using heatmap.2 in the gplots package in R

- how to remove samples with poor output (not very many sequences)

- how to rearrange your samples by a metadata category

- how to make a color coded bar above the heatmap for the metadata category

You will not learn:

- how to create an OTU table

- how to choose a good color palette for your color coded bar

- why heatmaps are called heatmaps

What you will need:

- metadata table

- OTU table

- and in case it wasn’t obvious, R

Introduction

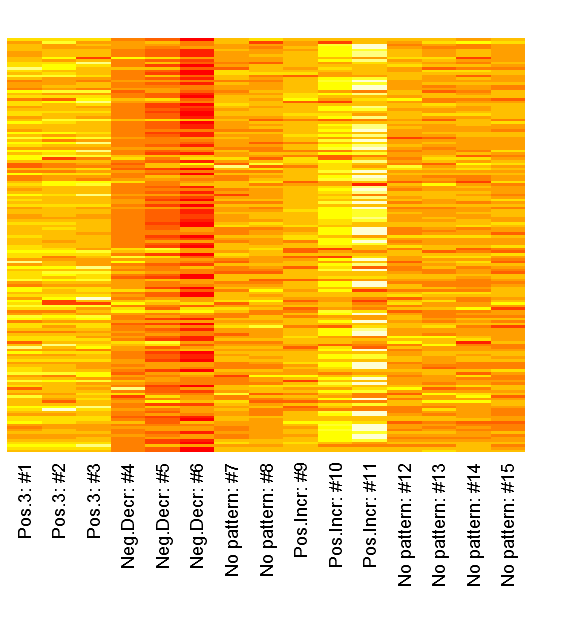

Figuring out code to go from an OTU table to a heatmap has been my dream ever since we saw a cool-looking heatmap in one of Dr. Lamendella’s presentations on the human gut microbiome (from the most awesome gut microbiome paper of 2012). A heatmap is a neat way to display a matrix of information as a color-coded grid, and it is not in any way related to Fahrenheit or Celsius. One of the most common applications of heatmaps is displaying gene expression levels from DNA microarrays.


Photo from Wikipedia: heatmap of a DNA microarray. Unfortunately for people with red-green color blindness, these two colors are the most commonly used for creating heatmaps.

In our case, the heatmap will work particularly well for displaying OTU abundances at various taxonomical levels. Heatmaps should also serve well once people in the lab (theoretically) start getting metaproteomic data. To see more examples of heatmaps used in seminal research, look at the supplementary figures of the coolest paper of 2012. This is a particularly long tutorial with code adapted from several sources. I will try my best to explain each step, but some of the steps in certain functions are still a bit foggy to me, and others would require unnecessarily long explanations. My original bare-bones tutorial on heatmaps can be found here, and it was mainly based on this tutorial by Nathan Yu. If you just want to make a quick heatmap, I suggest looking at those two links first.

Prepping the Files

You know how it goes: you need to get things into the right format before you can make the magic happen.

- Metadata file: your sample names should be listed down the first column, and the variables describing your samples go in the top row. You can have as many columns/variables as you like.

- OTU table: contrary to the metadata file, your sample names should be in the top row, whereas the OTU IDs go down the first column. When you scroll all the way to the right in your file, there should be a column with the taxonomical information in each row, such as

- “k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__Coprococcus; s__Coprococcus eutactus” or

- Root;Bacteria;Firmicutes;"Clostridia";Clostridiales;"Lachnospiraceae";"Lachnospiraceae Incertae Sedis"

Unlike the other columns, which have a title (the sample name), this column is probably blank. Type in “taxonomy”: all lowercase, no extra frills.

- Make sure the ID numbers/names for samples MATCH between your metadata file and OTU file. In reality, it will probably make things easier for you if your sample names are something like “Site2Samp3Vers1” rather than just “231”. This is one of those critical steps that absolutely must be done, or else R won’t be able to match up the OTU data of each sample with the metadata of that same sample. When creating your sample names, concatenation (joining several fields into one name) will probably help you out, as explained here.

- Make sure both files are saved as .csv (Comma-Separated Values) rather than as Excel .xls or .xlsx files. This keeps them as plain text and makes importing them into R easier.

Hopefully this didn’t take you too long! Now the fun begins.

Importing the files into R

OPTIONAL: I tend to have stuff saved accidentally in my R sessions that I no longer need, so my first step of every large script is removing anything that is currently in the work session.

ls()

rm(list=ls())

ls()

OPTIONAL: I also usually change my working directory at the beginning. This makes writing paths (the directions for R to find files) much easier and leaves less chance for things to get messed up. Write in the path of the folder where your metadata and OTU tables are located. The one written below follows the path conventions of a Windows PC (for Mac, see http://youtu.be/8xT3hmJQskU).

getwd()

setwd("c:/your_folder/project_1")

getwd()

OPTIONAL: The second getwd() call should repeat whatever working directory you just specified. To double check that your files are in the pathway you just specified:

dir()

Now we actually import the files into R. Change the names to whatever you named your file.

dat <- read.csv("OTU.csv", header=TRUE,row.names=1, sep=",")

meta <- read.csv("metadata.csv", header=TRUE,row.names=1, sep=",")
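To see what header=TRUE and row.names=1 do without touching your real files, here is a small sketch that reads two made-up tables from strings through the text= argument of read.csv() (the sample names, counts and the Diet column are invented, and the objects are prefixed with toy_ so they do not overwrite dat or meta). It also shows why purely numeric sample names are risky: by default read.csv() passes the header through make.names(), so a column called 231 comes back as X231 and no longer matches the metadata row name 231.

```r
# Hypothetical toy files, written inline instead of read from disk.
toy_otu_csv <- "OTU_ID,231,Site2Samp3Vers1,taxonomy
OTU1,50,20,k__Bacteria; p__Firmicutes
OTU2,10,40,k__Bacteria; p__Bacteroidetes"

toy_meta_csv <- "SampleID,Diet
231,A
Site2Samp3Vers1,B"

# header=TRUE: first line holds column names; row.names=1: first column becomes row names.
toy_dat  <- read.csv(text = toy_otu_csv,  header = TRUE, row.names = 1)
toy_meta <- read.csv(text = toy_meta_csv, header = TRUE, row.names = 1)

colnames(toy_dat)   # "X231" "Site2Samp3Vers1" "taxonomy": the numeric header got an X
rownames(toy_meta)  # "231" "Site2Samp3Vers1": row names are left as written

# OTU-table samples that have no matching metadata row.
setdiff(setdiff(colnames(toy_dat), "taxonomy"), rownames(toy_meta))  # "X231"

# check.names = FALSE keeps the header exactly as written in the file.
toy_dat_raw <- read.csv(text = toy_otu_csv, header = TRUE, row.names = 1,
                        check.names = FALSE)
setdiff(setdiff(colnames(toy_dat_raw), "taxonomy"), rownames(toy_meta))  # character(0)
```

On your real tables, the same setdiff() check should come back empty before you go any further.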

Also take the time to make a folder called “output” in your working directory folder. Several steps below write tables out to files, so it is nice to keep them separate. Just create the folder manually (right click, create new folder, etc.); it’s easier.

OTU Table “Manipulation” or Cleaning

As you already know, data usually don’t arrive in a ready-to-analyze format. From missing values to messed-up samples, now we take the time to clean our data (since the phrase “data manipulation” is not PC at all). In this process, we will split the OTU table into two objects. The first object (taxa.names) will contain just the taxonomical information, and the second (dat2) is a matrix that will contain the rest of the table.

taxa.names <- dat$taxonomy   # taxonomy strings, one per OTU

dat2 <- as.matrix(dat[,-dim(dat)[2]])   # drop the last (taxonomy) column, keep the counts
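A minimal sketch of what these two lines do, on a made-up three-sample table (names and counts are invented). dim(dat)[2] is the number of columns, so dat[,-dim(dat)[2]] drops the last column, which is why the taxonomy column has to be the last one in your file; the sketch uses ncol(), which returns the same number. Dropping the text column first matters because as.matrix() on a data frame that still contains it would give a character matrix instead of a numeric one.

```r
# Hypothetical toy OTU table: OTUs in rows, samples in columns, taxonomy last.
toy_dat <- data.frame(
  S1 = c(50, 10, 0),
  S2 = c(20, 40, 5),
  S3 = c(0, 3, 90),
  taxonomy = c("k__Bacteria; p__Firmicutes",
               "k__Bacteria; p__Bacteroidetes",
               "k__Bacteria; p__Proteobacteria"),
  row.names = c("OTU1", "OTU2", "OTU3"),
  stringsAsFactors = FALSE
)

toy_taxa   <- toy_dat$taxonomy                      # one taxonomy string per OTU
toy_counts <- as.matrix(toy_dat[, -ncol(toy_dat)])  # counts only, taxonomy removed

dim(toy_counts)         # 3 OTUs x 3 samples
is.numeric(toy_counts)  # TRUE: without the text column the matrix stays numeric
```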

Optional: Dropping Samples with Low Observations

This section is adapted from Dr. Schaefer’s class notes “Analyses of Diversity, Diversity and Similar Indices”. The whole class syllabus is rather fascinating and provides R equivalents of many processes (UniFrac analysis, nMDS, Indicator Species analysis, etc.) that we would normally do in QIIME or PC-ORD.

Although the subtitle says optional, it’s probably a good idea to drop samples with few observations. It is very unlikely that your sample really contained only 10 or even just 100 microbes.

First, create an object that contains the number of observations (sequences) for each sample by summing each column.

s_abundances<-apply(dat2,2,sum)

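Next, split the OTU table into two matrices: one with low abundances and one with high abundances. Currently the threshold is set at 1000, but you can change the number (in both lines). Using < in one line and >= in the other makes sure a sample with exactly 1000 sequences is not left out of both, and drop=FALSE keeps the result a matrix even if only one sample is selected.

bads<-dat2[,s_abundances<1000, drop=FALSE]

goods<-dat2[,s_abundances>=1000, drop=FALSE]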

To see how many poor samples will be removed from the OTU table:

ncol(dat2)-ncol(goods)

ncol(bads)

Now replace the old OTU table with the new one that contains only the good samples.

dat2<-goods
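A small sketch of the same split on a made-up count matrix, using colSums() (which gives the same totals as apply(dat2, 2, sum)). The two conditions are complementary (< and >=), so every sample ends up in exactly one of the two matrices. The threshold and counts are illustrative only.

```r
# Hypothetical toy count matrix: 3 OTUs (rows) x 4 samples (columns), filled by column.
toy_counts <- matrix(c(900, 50, 10,      # S1: 960 reads
                       600, 300, 100,    # S2: exactly 1000 reads
                       5, 3, 2,          # S3: 10 reads
                       4000, 800, 200),  # S4: 5000 reads
                     nrow = 3,
                     dimnames = list(c("OTU1", "OTU2", "OTU3"),
                                     c("S1", "S2", "S3", "S4")))

threshold <- 1000
sample_totals <- colSums(toy_counts)

# Complementary conditions: no sample is lost from both matrices.
toy_bads  <- toy_counts[, sample_totals <  threshold, drop = FALSE]
toy_goods <- toy_counts[, sample_totals >= threshold, drop = FALSE]

colnames(toy_bads)   # "S1" "S3"
colnames(toy_goods)  # "S2" "S4"
```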

Run these if you would like to output the good and bad samples.

write.table(bads, file="output/bads.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

write.table(goods, file="output/goods.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)


The next parts, on changing to relative abundance and extracting by different taxonomic levels, are based on a PowerPoint presentation in the lab module “Introduction to Metagenomic Data Analysis” by Alexander V. Alekseyenko and Susan P. Holmes (part of the Summer Institute in Statistics and Modeling of Infectious Diseases).

Recommended: Change to Relative Abundance

Right now, each OTU is probably reported as an absolute abundance: the raw count generated by OTU picking and related steps in QIIME. Sometimes there are specific reasons to keep absolute abundances, but most of the time you will need to make samples comparable to each other. This requires standardization: the count for each OTU becomes a fraction of the total count in that sample.

dat2 <- scale(dat2, center=FALSE, scale=colSums(dat2))   # divide each sample (column) by its total
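scale() with center=FALSE divides each column by the matching entry of its scale argument, so passing colSums() turns every sample's counts into proportions that add up to 1. The sketch below checks this on a made-up matrix and compares it with prop.table(x, 2), a base R function that does the same column-wise division; one practical difference is that scale() also attaches a "scaled:scale" attribute to its result.

```r
# Hypothetical toy count matrix: 3 OTUs (rows) x 2 samples (columns).
toy_counts <- matrix(c(30, 60, 10,   # S1: 100 reads
                       5, 5, 40),    # S2: 50 reads
                     nrow = 3,
                     dimnames = list(c("OTU1", "OTU2", "OTU3"), c("S1", "S2")))

# Divide each column by its total, without centering.
toy_rel <- scale(toy_counts, center = FALSE, scale = colSums(toy_counts))

colSums(toy_rel)       # both 1 (up to floating-point rounding)
toy_rel["OTU2", "S1"]  # 0.6: 60 of the 100 reads in S1

# Same numbers with prop.table(), ignoring the extra attribute.
all.equal(toy_rel, prop.table(toy_counts, 2), check.attributes = FALSE)  # TRUE
```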

Next, transpose the OTU table so that the row names are now Sample Names rather than OTU ID numbers.

dat2 <-t(dat2)

Data extraction by different taxonomic levels

It is much easier to analyze microbial ecology data at the phylum or class level than at the species level. However, the taxonomical identification of each OTU is currently compiled in one large, indigestible string. To start digesting it, we need to convert the object that contains the taxa names to character format (read.csv may have imported it as a factor).

taxa.names <- as.character(taxa.names)

This next set of code involves two functions included in that lab module by Alekseyenko and Holmes. These functions split up the taxonomy at each level, so you can analyze just the phyla or orders present. First you need to define the custom functions so that R knows how to run them. (Make sure to copy all the closing curly brackets, “}”!)

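extract.name.level = function(x, level){
  # split "a;b;c" into ranks and append 'Other' for ranks that are missing
  a=c(unlist(strsplit(x,';')),'Other')
  # keep the first `level` ranks, joined back together with ';'
  paste(a[1:min(level,length(a))],collapse=';')
}

otu2taxonomy = function(x, level, taxa=NULL){
  # x: samples in rows, OTUs in columns; taxa: one taxonomy string per OTU column
  if(is.null(taxa)){
    taxa = colnames(x)
  }
  if(length(taxa)!=dim(x)[2]){
    stop("taxonomy should have the same length as the number of columns in OTU table")
  }
  # truncated taxonomy name for every OTU
  level.names = sapply(as.character(taxa),
                       function(x)
                         extract.name.level(x,level=level))
  # within each sample, sum the OTUs that share a truncated name
  t(apply(x, 1,
          function(y)
            tapply(y,level.names,sum)))
}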

Next, take a closer look at the way the taxonomical designations are written in your OTU table, because the level numbers used below depend on where the phylum sits in each string. If they look like

- “k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__Coprococcus; s__Coprococcus eutactus”

there are seven levels: Kingdom, Phylum, Class, Order, Family, Genus and Species. The phylum is the second entry, so you will need to start numbering the levels at 2 rather than 1 in the extraction functions, as in the code below. If instead they look like

- Root;Bacteria;Firmicutes;"Clostridia";Clostridiales;"Lachnospiraceae";"Lachnospiraceae Incertae Sedis"

the string starts with Root, so the phylum (Firmicutes) is the third entry: start numbering the levels at 3 and shift each level number below up by one. Likewise, if your taxonomical designations begin directly with the phylum, start numbering at level 1.
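To check which level number lands on the phylum in your own file, you can call extract.name.level() on one of your taxonomy strings and compare a few levels. The sketch repeats the function so it runs on its own; the two strings are shortened versions of the example formats above (the inner quotes are left out for simplicity).

```r
# Copy of extract.name.level(): keep the first `level` ranks of a taxonomy string.
extract.name.level = function(x, level){
  a = c(unlist(strsplit(x, ';')), 'Other')
  paste(a[1:min(level, length(a))], collapse = ';')
}

prefixed   <- "k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales"
root_first <- "Root;Bacteria;Firmicutes;Clostridia;Clostridiales"

extract.name.level(prefixed, 2)    # "k__Bacteria; p__Firmicutes": phylum at level 2
extract.name.level(root_first, 2)  # "Root;Bacteria": still only the kingdom
extract.name.level(root_first, 3)  # "Root;Bacteria;Firmicutes": phylum at level 3

# Asking for more levels than exist pads the name with 'Other'.
extract.name.level(root_first, 9)  # "Root;Bacteria;Firmicutes;Clostridia;Clostridiales;Other"
```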

d.phylum = otu2taxonomy(dat2,level=2,taxa=taxa.names)

d.class = otu2taxonomy(dat2,level=3,taxa=taxa.names)

d.order = otu2taxonomy(dat2,level=4,taxa=taxa.names)

d.family = otu2taxonomy(dat2,level=5,taxa=taxa.names)

d.genus = otu2taxonomy(dat2,level=6,taxa=taxa.names)

d.species = otu2taxonomy(dat2,level=7,taxa=taxa.names)
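otu2taxonomy() groups the OTU columns by their truncated taxonomy name and sums them within each sample (that is what tapply(y, level.names, sum) does, one sample row at a time). The sketch runs a compact copy of the two functions (using stop() for the length check) on a made-up table with two samples and three OTUs, so you can see the two Firmicutes OTUs collapse into a single phylum column. Like dat2 after the transpose, the input has samples in rows.

```r
extract.name.level = function(x, level){
  a = c(unlist(strsplit(x, ';')), 'Other')
  paste(a[1:min(level, length(a))], collapse = ';')
}

otu2taxonomy = function(x, level, taxa = NULL){
  if (is.null(taxa)) taxa = colnames(x)
  if (length(taxa) != dim(x)[2])
    stop("taxonomy should have the same length as the number of columns in OTU table")
  level.names = sapply(as.character(taxa), function(x) extract.name.level(x, level = level))
  t(apply(x, 1, function(y) tapply(y, level.names, sum)))
}

# Hypothetical relative abundances: samples in rows, OTUs in columns (filled by column).
toy_rel <- matrix(c(0.5, 0.2,   # OTU1
                    0.3, 0.1,   # OTU2
                    0.2, 0.7),  # OTU3
                  nrow = 2,
                  dimnames = list(c("S1", "S2"), c("OTU1", "OTU2", "OTU3")))
toy_taxa <- c("k__Bacteria; p__Firmicutes; c__Clostridia",
              "k__Bacteria; p__Firmicutes; c__Bacilli",
              "k__Bacteria; p__Bacteroidetes; c__Bacteroidia")

toy_phylum <- otu2taxonomy(toy_rel, level = 2, taxa = toy_taxa)
toy_phylum
# rows S1, S2; columns "k__Bacteria; p__Bacteroidetes" and "k__Bacteria; p__Firmicutes"
# Firmicutes: S1 = 0.5 + 0.3 = 0.8, S2 = 0.2 + 0.1 = 0.3
```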

To look at the output, transpose and export.

phylum2 <-t(d.phylum)

class2 <-t(d.class)

order2 <-t(d.order)

family2 <-t(d.family)

genus2 <-t(d.genus)

species2 <-t(d.species)

write.table(phylum2, file="output/phyla.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

write.table(class2, file="output/classes.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

write.table(order2, file="output/orders.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

write.table(family2, file="output/families.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

write.table(genus2, file="output/genera.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

write.table(species2, file="output/species.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

These steps allow you to analyze your OTU data at various taxonomical levels within and outside of R.

Merging metadata and OTU tables

Next we will merge the metadata and OTU table together. Originally, I tried to figure out a way to avoid this step but R was not being so cooperative with reordering. We are merging the two tables so that when we rearrange the samples according to a metadata variable, the OTU table rearranges as well.

First choose which taxonomical level you want to look at. For simplicity’s sake, let’s look at phyla first. This first script merges the two tables by matching up the Sample ID/Names. The “all.x=FALSE” portion makes sure that metadata information is not included for samples that were dropped earlier from the data processing (if you skipped that step, this should not affect the output).

mergephylum<-merge(meta, d.phylum, by="row.names",all.x=FALSE)

There are multiple ways to double check the merge worked. The easiest is just by looking at the dimensions of each object involved.

dim(meta)

dim(d.phylum)

dim(mergephylum)

The first number is the number of rows and the second is the number of columns. The number of rows in the final merge should match the number of rows in the phylum table (or whichever level you are looking at), as long as every sample in it has a row in the metadata. The number of columns in the final merge should be the sum of the columns in d.phylum and meta, plus 1 for the Row.names column that merge() adds. Again, here is the code for output.
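The sketch below merges a made-up metadata table with a made-up phylum table to show that bookkeeping: merge(..., by = "row.names") moves the shared sample names into a new first column called Row.names, so the result has ncol(meta) + ncol(d.phylum) + 1 columns, and the metadata row for a sample missing from the phylum table (here S3, standing in for a sample dropped by the read-count filter) is left out. The Diet and pH columns are hypothetical.

```r
# Hypothetical metadata for three samples.
toy_meta <- data.frame(Diet = c("B", "A", "A"), pH = c(6.8, 7.2, 6.5),
                       row.names = c("S1", "S2", "S3"))

# Hypothetical phylum-level relative abundances; S3 was filtered out earlier.
toy_phylum <- matrix(c(0.8, 0.3,    # Firmicutes
                       0.2, 0.7),   # Bacteroidetes
                     nrow = 2,
                     dimnames = list(c("S1", "S2"), c("Firmicutes", "Bacteroidetes")))

toy_merge <- merge(toy_meta, toy_phylum, by = "row.names", all.x = FALSE)

dim(toy_merge)       # 2 rows; 2 + 2 + 1 = 5 columns
colnames(toy_merge)  # "Row.names" "Diet" "pH" "Firmicutes" "Bacteroidetes"
```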

write.table(mergephylum, file="output/mergephylum.csv", col.names=NA,row.names=TRUE, sep=",", quote=FALSE)

Reordering by metadata variable

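If you want your heatmap ordered by sample name, or the order does not matter, you can skip this step: merge() already sorts the rows by Row.names. Just run reordermerge <- mergephylum so that the next step (making the actual matrix for the heatmap) has the object it expects.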

You can reorder your samples based on a variable in your metadata. This can be anything from diet type to sample site. The variable that will direct the way your table will be reordered is not limited to just being discrete (as in group A, group B, etc.); the variable can also be continuous (blood pressure, height, pH).

For this rearrangement code, you will need the exact name of the column of the variable. Since names might have changed since importation into R, it’s best to double check. This lists the names of all the columns of your metadata.

colnames(meta)

Now use the name of the variable in question and replace “VARIABLE” in the following code.

reordermerge <- mergephylum[order(mergephylum$VARIABLE, mergephylum$Row.names),]

This will cause the table to be rearranged by increasing value of the VARIABLE (or alphabetical order) and then by the Row.names or Sample ID/Names.
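A minimal sketch on a made-up merged table: order() returns the row positions that sort by the first key (here a hypothetical Diet column) and break ties with the second (the sample names), and indexing with those positions moves whole rows, so the OTU abundances travel with their samples. A continuous variable such as pH works the same way; order(..., decreasing = TRUE) reverses the direction.

```r
# Hypothetical merged table: sample names, one metadata column, two phyla.
toy_merge <- data.frame(Row.names     = c("S1", "S2", "S3", "S4"),
                        Diet          = c("B", "A", "B", "A"),
                        Firmicutes    = c(0.8, 0.3, 0.6, 0.1),
                        Bacteroidetes = c(0.2, 0.7, 0.4, 0.9),
                        stringsAsFactors = FALSE)

toy_reorder <- toy_merge[order(toy_merge$Diet, toy_merge$Row.names), ]

toy_reorder$Row.names   # "S2" "S4" "S1" "S3": diet A first, then B, by name within each
toy_reorder$Firmicutes  # 0.3 0.1 0.8 0.6: the abundances moved with their samples
```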

Making the actual matrix for the heatmap

This next set of code splits the OTU data and metadata apart again so that the heatmap will display only OTU data rather than metadata as well. It involves removing columns, renaming the rows and converting the object to a matrix.

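(OTUcol1<-ncol(meta)+2)   # first OTU column: column 1 is Row.names, then the metadata columns

(OTUcol2<-ncol(reordermerge))   # last OTU column

justOTU<-reordermerge[,OTUcol1:OTUcol2]   # keep only the OTU columns

justOTU[1:5,1:5]   # peek at the first few rows and columns

rownames(justOTU[1:10,])   # row names are still the numbers merge() assigned

rownames(justOTU)<-reordermerge$Row.names   # use the sample names instead

rownames(justOTU[1:10,])   # check that the sample names took

justOTU2<-as.matrix(t(justOTU))   # transpose: taxa in rows, samples in columns

justOTU2[1:5,1:5]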
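On a made-up merged table with a single metadata column, the same column arithmetic looks like this: the OTU columns start at ncol(meta) + 2 because column 1 is Row.names and the next ncol(meta) columns are metadata. The final transpose puts taxa in rows and samples in columns, so the samples run along the x axis of the heatmap. All object names and values here are hypothetical.

```r
# Hypothetical metadata (one column) and an already reordered merged table.
toy_meta <- data.frame(Diet = c("A", "B"), row.names = c("S1", "S2"))
toy_reorder <- data.frame(Row.names     = c("S1", "S2"),
                          Diet          = c("A", "B"),
                          Firmicutes    = c(0.8, 0.3),
                          Bacteroidetes = c(0.2, 0.7),
                          stringsAsFactors = FALSE)

first_otu_col <- ncol(toy_meta) + 2           # skip Row.names and the metadata columns
last_otu_col  <- ncol(toy_reorder)
toy_just <- toy_reorder[, first_otu_col:last_otu_col]
rownames(toy_just) <- toy_reorder$Row.names   # label rows with sample names
toy_mat <- as.matrix(t(toy_just))             # taxa in rows, samples in columns

toy_mat  # 2 x 2: rows Firmicutes, Bacteroidetes; columns S1, S2
```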

Heatmap!!!

Hurray! After all those steps, we are finally ready to make the actual heatmap.

First install the package that contains the heatmap.2 function (you only need to install it once), then load it. (Refresh your memory on packages here.)

install.packages("gplots")

library(gplots)

Now print out your heatmap!

heatmap.2(justOTU2, Rowv=TRUE, Colv=FALSE, dendrogram="row", scale="column", trace="none", col=redgreen, xlab="sample", ylab="phylum", margins=c(10,15))

If you are happy with this image, skip to “Export the Heatmap.“

This tutorial will be covering some of the arguments within this function for customization. In case you want to know about all the possibilities, read this documentation on heatmap.2.

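Looking at some of the arguments already present:

- Rowv=TRUE clusters the rows (phyla) and reorders them to match the row dendrogram.

- Colv=FALSE leaves the columns (samples) in the order they have in justOTU2, so the ordering from the metadata reordering step is kept.

- scale="column" centers and scales the values within each column (sample), so the colors show how far each phylum is above or below that sample’s mean, in standard deviations.

- trace="none" turns off the trace lines that heatmap.2 otherwise draws through the cells.

- col=redgreen sets the color palette (redgreen comes with gplots).

- xlab and ylab label the axes, and margins=c(10,15) sets the space (in lines of text) for the column and row names, respectively.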

Optional: Dendrograms

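A sketch of the dendrogram-related arguments of heatmap.2, on random made-up data: Rowv and Colv decide whether rows and columns are reordered by hierarchical clustering (TRUE) or kept in the input order (FALSE), and dendrogram ("row", "column", "both" or "none") decides which trees are drawn. The plots go to a temporary PDF so the sketch runs without a screen, and the calls are skipped if gplots is not installed.

```r
set.seed(1)
# Hypothetical matrix: 6 taxa (rows) x 8 samples (columns).
toy_mat <- matrix(runif(48), nrow = 6,
                  dimnames = list(paste0("Phylum", 1:6), paste0("S", 1:8)))

if (requireNamespace("gplots", quietly = TRUE)) {
  out <- tempfile(fileext = ".pdf")
  pdf(out)
  # Cluster taxa, keep samples in their (metadata) order, draw only the row tree.
  gplots::heatmap.2(toy_mat, Rowv = TRUE, Colv = FALSE, dendrogram = "row",
                    scale = "column", trace = "none")
  # Cluster both taxa and samples and draw both trees.
  gplots::heatmap.2(toy_mat, Rowv = TRUE, Colv = TRUE, dendrogram = "both",
                    scale = "column", trace = "none")
  invisible(dev.off())
}
```

Keeping Colv = FALSE is what preserves a metadata ordering; with Colv = TRUE the samples are rearranged by similarity instead.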

Optional: Change the color scale

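To change the color scale, repeat the heatmap.2 code above with a different value for col. (See “colors in R” for the colors you can choose from; colors() lists all of R’s built-in color names.)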
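The col argument also accepts a vector of colors, so one way to change the color scale is to build your own gradient with colorRampPalette() from base R, for example a blue-white-red scale that avoids the red-green pairing mentioned in the introduction. The specific colors and the 75-step length are arbitrary choices for illustration, and the data are random.

```r
# A 75-step blue -> white -> red gradient (colors chosen for illustration).
blue_white_red <- colorRampPalette(c("blue", "white", "red"))(75)
length(blue_white_red)  # 75
blue_white_red[1]       # "#0000FF"

set.seed(1)
# Hypothetical matrix: 6 taxa (rows) x 8 samples (columns).
toy_mat <- matrix(runif(48), nrow = 6,
                  dimnames = list(paste0("Phylum", 1:6), paste0("S", 1:8)))

if (requireNamespace("gplots", quietly = TRUE)) {
  out <- tempfile(fileext = ".pdf")
  pdf(out)
  gplots::heatmap.2(toy_mat, Rowv = TRUE, Colv = FALSE, dendrogram = "row",
                    scale = "column", trace = "none", col = blue_white_red)
  invisible(dev.off())
}
```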

Optional: Colored Side Bar

For code to make the side bar, see Peter Cock’s heatmap tutorial:

http://www2.warwick.ac.uk/fac/sci/moac/people/students/peter_cock/r/heatmap/
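heatmap.2 draws the color-coded bar above the columns through its ColSideColors argument: a character vector of colors with one entry per column of the matrix, in the same order as the columns. Because Colv = FALSE keeps the samples in the order set by the metadata reordering step, the vector can be built directly from the metadata column. The sketch maps a hypothetical two-level Diet variable to two colors with a named lookup vector; the names, colors and data are made up.

```r
set.seed(1)
# Hypothetical inputs: taxa x samples matrix and one metadata category per sample,
# already in the same sample order (as after the reordering step).
toy_mat  <- matrix(runif(24), nrow = 4,
                   dimnames = list(paste0("Phylum", 1:4), paste0("S", 1:6)))
toy_diet <- c("A", "A", "A", "B", "B", "B")

# One color per category, then one color per sample (column).
diet_colors <- c(A = "orange", B = "purple")
side_colors <- unname(diet_colors[toy_diet])

stopifnot(length(side_colors) == ncol(toy_mat))  # heatmap.2 needs one color per column

if (requireNamespace("gplots", quietly = TRUE)) {
  out <- tempfile(fileext = ".pdf")
  pdf(out)
  gplots::heatmap.2(toy_mat, Rowv = TRUE, Colv = FALSE, dendrogram = "row",
                    scale = "column", trace = "none",
                    ColSideColors = side_colors)
  invisible(dev.off())
}
```

With the tutorial’s own objects, the vector could be built as unname(my_colors[as.character(reordermerge$VARIABLE)]), where my_colors is a hypothetical named vector with one color per category and VARIABLE is your metadata column from the reordering step.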

Export the Heatmap

To display the numbers in each cell of the heatmap, see:

http://mannheimiagoesprogramming.blogspot.com/search?updated-min=2012-01-01T00:00:00%2B02:00&updated-max=2013-01-01T00:00:00%2B02:00&max-results=4

For multiple side bars, see: http://www.biostars.org/p/18211/
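One common way to save the heatmap from a script is to open a file graphics device, draw the plot, and close the device with dev.off(), which writes the file. A minimal sketch, assuming the “output” folder from earlier (it is created here if missing); the random toy matrix stands in for justOTU2, and the file name and page size are arbitrary. It also uses the cellnote and notecol arguments of heatmap.2 to print each value in its cell. It writes a PDF because that device works without a display; png("output/heatmap_phylum.png", width = 1200, height = 1000, res = 150) can be used in the same place.

```r
set.seed(1)
# Hypothetical stand-in for justOTU2: taxa in rows, samples in columns.
toy_mat <- matrix(runif(24), nrow = 4,
                  dimnames = list(paste0("Phylum", 1:4), paste0("S", 1:6)))

dir.create("output", showWarnings = FALSE)   # does nothing if the folder already exists
out_file <- file.path("output", "heatmap_phylum.pdf")

if (requireNamespace("gplots", quietly = TRUE)) {
  pdf(out_file, width = 8, height = 7)       # open the file device (size in inches)
  gplots::heatmap.2(toy_mat, Rowv = TRUE, Colv = FALSE, dendrogram = "row",
                    scale = "column", trace = "none",
                    cellnote = round(toy_mat, 2),  # print each value in its cell
                    notecol = "black")
  invisible(dev.off())                       # close the device: this writes the file
}
```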


To leave a comment for the author, please follow the link and comment on his blog: Learning Omics » R.
